In October 2015, a data journalist named Walt Hickey analyzed movie ratings data and found strong evidence to suggest that Fandango’s rating system was biased and dishonest. He published his analysis in this article — a great piece of data journalism that’s totally worth reading. Fandango displays a 5-star rating system on their website, where the minimum rating is 0 stars and the maximum is 5 stars.

Hickey found that there’s a significant discrepancy between the number of stars displayed to users and the actual rating, which he was able to find in the HTML of the page. He was also able to find that:

The actual rating was almost always rounded up to the nearest half-star. For instance, a 4.1 movie would be rounded off to 4.5 stars, not to 4 stars, as you may expect.

In the case of 8% of the ratings analyzed, the rounding up was done to the nearest whole star. For instance, a 4.5 rating would be rounded off to 5 stars.

For one movie rating, the rounding off was completely bizarre: from a rating of 4 in the HTML of the page to a displayed rating of 5 stars.

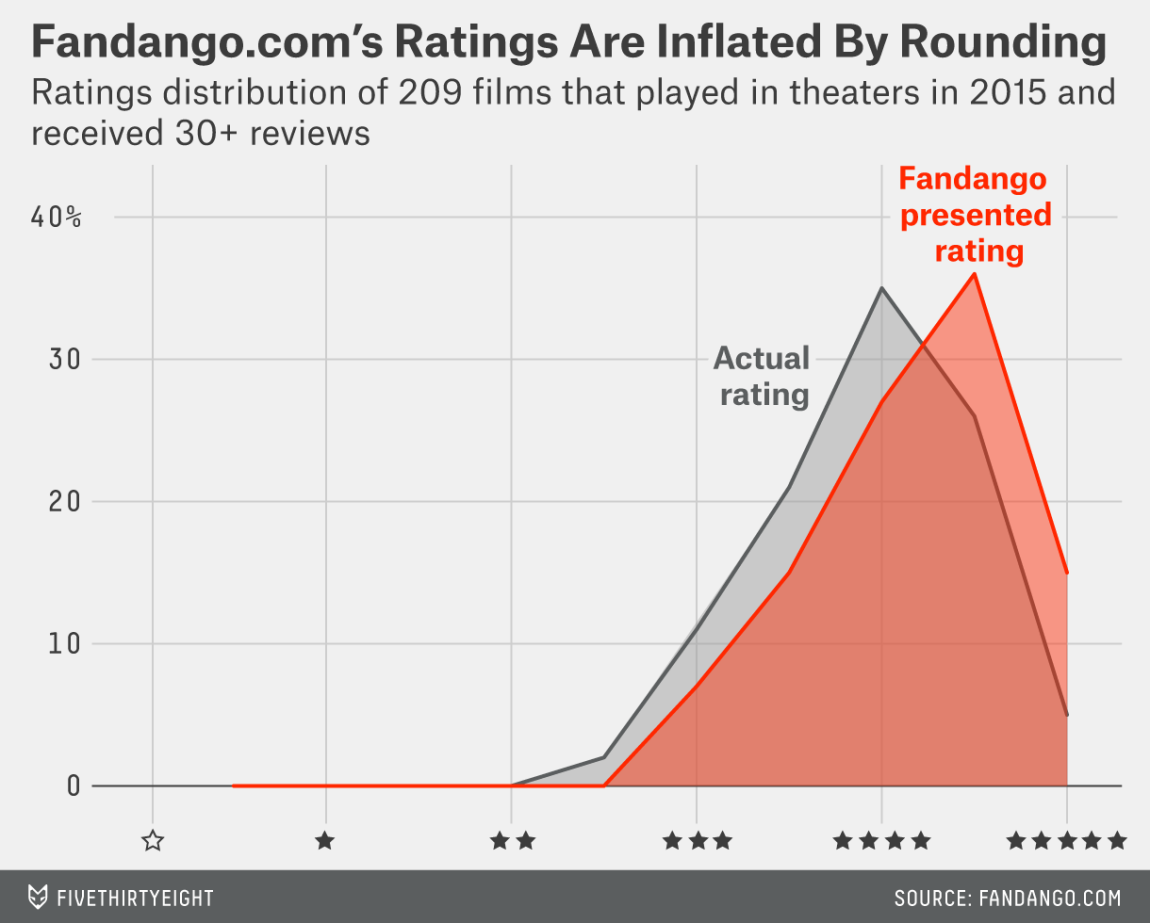

The two distributions above are displayed using a simple line plot, which is also a valid way to show the shape of a distribution. The variable being examined is movie rating, and for each unique rating we can see its relative frequency (percentage) on the y-axis of the graph.

Both distributions above are strongly left skewed, suggesting that movie ratings on Fandango are generally high or very high. We can see there’s no rating under 2 stars in the sample Hickey analyzed. The distribution of displayed ratings is clearly shifted to the right compared to the actual rating distribution, suggesting strongly that Fandango inflates the ratings under the hood.

Fandango’s officials replied that the biased rounding off was caused by a bug in their system rather than being intentional, and they promised to fix the bug as soon as possible. Presumably, this has already happened, although we can’t tell for sure since the actual rating value doesn’t seem to be displayed anymore in the pages’ HTML.

In this project, we’ll analyze more recent movie ratings data to determine whether there has been any change in Fandango’s rating system after Hickey’s analysis.

One of the best ways to figure out whether there has been any change in Fandango’s rating system after Hickey’s analysis is to compare the system’s characteristics previous and after the analysis. Fortunately, we have ready-made data for both these periods of time:

Walt Hickey made the data he analyzed publicly available on GitHub. We’ll use the data he collected to analyze the characteristics of Fandango’s rating system previous to his analysis.

The movie ratings data for movies released in 2016-2017 is publicly available on GitHub and we’ll use it to analyze the rating system’s characteristics after Hickey’s analysis.

# Load the necessary modules for analysis

import pandas as pd

import numpy as np

import seaborn as sns

pd.options.display.max_columns = 100 # Avoid having displayed truncated output

# Read the data from fandango and movies released in 2016-2017

fandango_sc = pd.read_csv('fandango_score_comparison.csv')

movie_16_17 = pd.read_csv('movie_ratings_16_17.csv')

Let us have a look at the first few observations in both datasets.

fandango_sc.head()

movie_16_17.head()

Below we isolate only the columns that provide information about Fandango so we make the relevant data more readily available for later use. We’ll make copies to avoid any SettingWithCopyWarning later on.

# Isolating the columns that offer information about Fandango rating

fandango_before = fandango_sc[['FILM', 'Fandango_Stars', 'Fandango_Ratingvalue', 'Fandango_votes', 'Fandango_Difference']].copy()

fandango_after = movie_16_17[['movie', 'year', 'fandango']].copy()

Since our goal is to determine whether there has been any change in in Fandango’s rating system after Hickey’s analysis; population of interest is all the movie ratings on Fandango website.

Because we want to find out whether the parameters of this population changed after Hickey’s analysis, we’re interested in sampling the population at two different periods in time — previous and after Hickey’s analysis — so we can compare the two states.

The data we’re working with was sampled at the time periods we wanted: one sample was taken previous to the analysis, and the other after the analysis. We want to describe the population, so we need to make sure that the samples are representative of the population, otherwise we should expect a large sampling error and, ultimately, wrong conclusions.

From the readme.md page on Hickey’s dataset repository we see that he used the following sampling criteria:

- The movie must have had at least 30 fan ratings on Fandango’s website at the time of sampling (Aug. 24, 2015).

- The movie must have had tickets on sale in 2015.

The sampling was clearly not random because not every movie had the same chance to be included in the sample — some movies didn’t have a chance at all (like those having under 30 fan ratings or those without tickets on sale in 2015). It’s questionable whether this sample is representative of the entire population we’re interested to describe. It seems more likely that it isn’t, mostly because this sample is subject to temporal trends — e.g. movies in 2015 might have been outstandingly good or bad compared to other years.

The sampling conditions for our other sample were (as it can be read in the README.md of the data set’s repository:

- The movie must have been released in 2016 or later.

- The movie must have had a considerable number of votes and reviews (unclear how many from the README.md or from the data).

This second sample is also subject to temporal trends and it’s unlikely to be representative of our population of interest.

Both these authors had certain research questions in mind when they sampled the data, and they used a set of criteria to get a sample that would fit their questions. Their sampling method is called purposive sampling (or judgmental/selective/subjective sampling). While these samples were good enough for their research, they don’t seem too useful for us.

Modifying the goal of our analysis

At this point, we can either collect new data or change the goal of our analysis. We choose the latter and place some limitations on our initial goal.

Instead of trying to determine whether there has been any change in Fandango’s rating system after Hickey’s analysis, our new goal is to determine whether there’s any difference between Fandango’s ratings for popular movies in 2015 and Fandango’s ratings for popular movies in 2016. This new goal should is a fairly good proxy for our initial goal.

Isolating required samples

With this new research goal, we have two populations of interest:

- All Fandango’s ratings for popular movies released in 2015.

- All Fandango’s ratings for popular movies released in 2016.

We need to be clear about what counts as popular movies. We’ll use Hickey’s benchmark of 30 fan ratings and count a movie as popular only if it has 30 fan ratings or more on Fandango’s website.

Although one of the sampling criteria in our second sample is movie popularity, the sample doesn’t provide information about the number of fan ratings. We should be skeptical once more and ask whether this sample is truly representative and contains popular movies (movies with over 30 fan ratings).

One quick way to check the representativity of this sample is to sample randomly 10 movies from it and then check the number of fan ratings ourselves on Fandango’s website. Ideally, at least 8 out of the 10 movies have 30 fan ratings or more.

fandango_after.sample(n = 10, random_state = 3)

| movie | year | fandango | |

|---|---|---|---|

| 146 | Sleepless | 2017 | 4.0 |

| 25 | Bleed for This | 2016 | 4.0 |

| 163 | The Boss | 2016 | 3.5 |

| 108 | Mechanic: Resurrection | 2016 | 4.0 |

| 83 | Jane Got a Gun | 2016 | 3.5 |

| 197 | The Take (Bastille Day) | 2016 | 4.0 |

| 211 | xXx: Return of Xander Cage | 2017 | 4.0 |

| 77 | In a Valley of Violence | 2016 | 4.0 |

| 34 | Central Intelligence | 2016 | 4.5 |

| 203 | Underworld: Blood Wars | 2016 | 4.0 |

Above we used a value of 3 as the random seed. This is good practice because it suggests that we weren’t trying out various random seeds just to get a favorable sample.

As of November 2019, these are the fan ratings we found:

| movie | Fan Ratings |

|---|---|

| Sleepless | 9671 |

| Bleed for This | 8803 |

| The Boss | 27080 |

| Mechanic: Resurrection | 25576 |

| Jane Got a Gun | 14035 |

| The Take (Bastille Day) | 3786 |

| xXx: Return of Xander Cage | 25179 |

| In a Valley of Violence | 4045 |

| Central Intelligence | 54199 |

| Underworld: Blood Wars | 22079 |

90% of the movies in our sample are popular. This is enough and we move forward with a bit more confidence.

Let’s also double-check the other data set for popular movies. The documentation states clearly that there’re only movies with at least 30 fan ratings, but it should take only a couple of seconds to double-check here.

sum(fandango_before['Fandango_votes'] < 30)

Let us explore the two datasets to ensure that they are enough populare movies, to make it worth while.

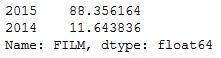

fandango_before.FILM.str.extract('(\d\d\d\d)').value_counts(normalize = True) * 100

We see that 88% of the movies in Hickey’s data are from 2015 but only 12% of the cases are for 2014.

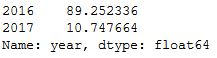

fandango_after['year'].value_counts(normalize = True)*100

We see a similar pattern here where 2016 has 89% of the movies and 2017 has only about 11%.

Because there are so few popular movies in our sample of 2014 and 2017 any analysis we try to obtain from it is probably not going to be representative of the movie ratings. For this reason, it’s probably just best to stick to the years 2015 and 2016.

Let’s start with Hickey’s data set and isolate only the movies released in 2015.

# Extract the Year from FILM column

fandango_before['Year'] = fandango_before.FILM.str.extract('(\d\d\d\d)').astype(int)

# Isolate the movies released in 2015

fandango_2015 = fandango_before[fandango_before['Year'] == 2015].copy()

fandango_2015.head()

# Isolate the movies released in 2016

fandango_2016 = fandango_after[fandango_after['year'] == 2016].copy()

fandango_2016.head()

Compare the Distribution Shapes for 2015 and 2016

Our goal is to determine whether there’s any difference between Fandango’s ratings for popular movies in 2015 and Fandango’s ratings for popular movies in 2016.

There are many ways we can go about with our analysis, but let’s start simple with making a high-level comparison between the shapes of the distributions of movie ratings for both samples.

import matplotlib.pyplot as plt

%matplotlib inline

plt.style.use('fivethirtyeight')

fandango_2015['Fandango_Stars'].plot.kde(label = '2015', legend = True, figsize = (8,5.5))

fandango_2016['fandango'].plot.kde(label = '2016', legend = True)

plt.title("Comparing distribution shapes for Fandango's ratings\n(2015 vs 2016)",

y = 1.07) # the `y` parameter pads the title upward

plt.xlim(0,5)

plt.xticks(np.arange(0, 5.1, 0.5))

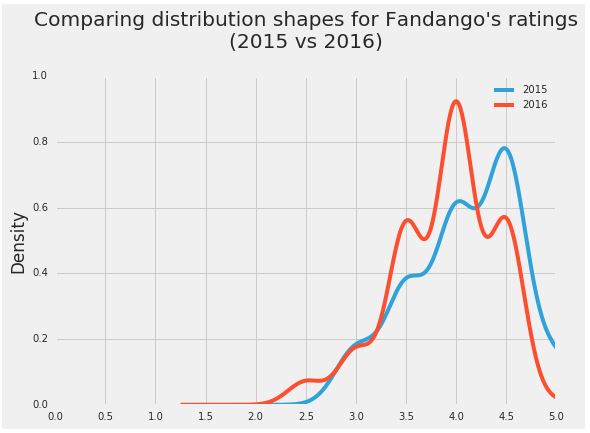

Two aspects are striking on the figure above:

- Both distributions are strongly left skewed.

- The 2016 distribution is slightly shifted to the left relative to the 2015 distribution.

The left skew suggests that movies on Fandango are given mostly high and very high fan ratings. Coupled with the fact that Fandango sells tickets, the high ratings are a bit dubious.

The slight left shift of the 2016 distribution is very interesting for our analysis. It shows that ratings were slightly lower in 2016 compared to 2015. This suggests that there was a difference indeed between Fandango’s ratings for popular movies in 2015 and Fandango’s ratings for popular movies in 2016. We can also see the direction of the difference: the ratings in 2016 were slightly lower compared to 2015.

Comparing Relative Frequencies

It seems we’re following a good thread so far, but we need to analyze more granular information. Let’s examine the frequency tables of the two distributions to analyze some numbers. Because the data sets have different numbers of movies, we normalize the tables and show percentages instead.

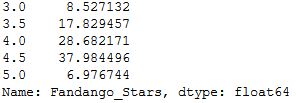

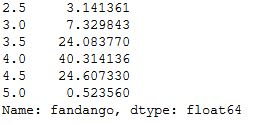

fandango_2015['Fandango_Stars'].value_counts(normalize = True).sort_index() * 100

fandango_2016['fandango'].value_counts(normalize = True).sort_index() * 100

In 2016, very high ratings (4.5 and 5 stars) had significantly lower percentages compared to 2015. In 2016, under 1% of the movies had a perfect rating of 5 stars, compared to 2015 when the percentage was close to 7%. Ratings of 4.5 were also more popular in 2015 — there were approximately 13% more movies rated with a 4.5 in 2015 compared to 2016.

The minimum rating is also lower in 2016 — 2.5 instead of 3 stars, the minimum of 2015. There clearly is a difference between the two frequency distributions.

For some other ratings, the percentage went up in 2016. There was a greater percentage of movies in 2016 that received 3.5 and 4 stars, compared to 2015. 3.5 and 4.0 are high ratings and this challenges the direction of the change we saw on the kernel density plots.

Determining the Direction of the Change

We confirmed with the two tables above that there is indeed a clear difference between the two distributions. However, the direction of the difference is not as clear as it was on the kernel density plots.

We’ll take a couple of summary statistics to get a more precise picture about the direction of the difference. We’ll take each distribution of movie ratings and compute its mean, median, and mode, and then compare these statistics to determine what they tell about the direction of the difference.

mean_2015 = fandango_2015['Fandango_Stars'].mean()

mean_2016 = fandango_2016['fandango'].mean()

median_2015 = fandango_2015['Fandango_Stars'].median()

median_2016 = fandango_2016['fandango'].median()

mode_2015 = fandango_2015['Fandango_Stars'].mode()[0]

mode_2016 = fandango_2016['fandango'].mode()[0]

summary = pd.DataFrame()

summary['2015'] = [mean_2015, median_2015, mode_2015]

summary['2016'] = [mean_2016, median_2016, mode_2016]

summary.index = ['mean', 'median', 'mode']

summary

| 2015 | 2016 | |

|---|---|---|

| mean | 4.085271 | 3.887435 |

| median | 4.000000 | 4.000000 |

| mode | 4.500000 | 4.000000 |

plt.style.use('fivethirtyeight')

summary['2015'].plot.bar(color = '#f39d93',align = 'center', label = '2015')

summary['2016'].plot.bar(color = '#579de3', align = 'edge', label = '2016')

plt.title('Comparing summary statistics: 2015 vs 2016', y = 1.07)

plt.ylim(0,5.5)

plt.yticks(np.arange(0,5.1,.5))

plt.ylabel('Stars')

plt.legend(framealpha = 0, loc = 'upper center')

plt.show()

The mean rating was lower in 2016 with approximately 0.2. This means a drop of almost 5% relative to the mean rating in 2015.

(summary.loc['mean'][0] - summary.loc['mean'][1]) / summary.loc['mean'][0

0.048426835689519929

While the median is the same for both distributions, the mode is lower in 2016 by 0.5. Coupled with what we saw for the mean, the direction of the change we saw on the kernel density plot is confirmed: on average, popular movies released in 2016 were rated slightly lower than popular movies released in 2015.

Conclusion

Our analysis showed that there’s indeed a slight difference between Fandango’s ratings for popular movies in 2015 and Fandango’s ratings for popular movies in 2016. We also determined that, on average, popular movies released in 2016 were rated lower on Fandango than popular movies released in 2015.

We cannot be completely sure what caused the change, but the chances are very high that it was caused by Fandango fixing the biased rating system after Hickey’s analysis.